Lies, damned lies, and benchmarks.

Note: the GitHub code has since been updated. You can see the implementation this post is referring to by viewing the “1.0” tag.

I have been investigating various methods of statistically inferring synonyms from natural language corpora. As a part of my investigation, I implemented a method based on collocation counting, where two words are considered likely to be synonyms if they tend to occur close to each other in the training corpus. Since counting collocations is a CPU- and memory-intensive problem, I implemented my solution in two languages which I knew had excellent compilers: C++ (G++) and Haskell (GHC). I then benchmarked the compiled binaries in terms of speed and memory use. Both of my implementations were algorithmically equivalent and emphasized simplicity and ease of development over performance. As such, I believe they provide a measure of the relative performance that users of C++ and Haskell can expect from similar programs without manual optimizations. In this article I present the results of my evaluation.

Background

When using collocations for synonym inference, it is assumed that two words $w$ and $v$ are more likely to have a related meaning if they tend to occur close together. This is captured by a metric called mutual information [1]:

$$MI(w, v) = \log \frac{P(w, v)}{P(w)\,P(v)}$$

The individual probabilities can be estimated from data. For simplicity, assume we are using maximum likelihood estimates based on some language corpus $C$. $P(w)$ then becomes the frequency of $w$ in $C$, and $P(w, v)$ the frequency of $w$ occurring in close proximity to $v$. What constitutes proximity depends on the implementation. One popular approach is to use absolute word distance: $w$ and $v$ are considered in each other’s proximity if they are separated by at most $k$ words, $k$ being a parameter of the model. Another approach is to use sentence distance, defined analogously. In my implementation I use sentence distance. I furthermore ignore words in the current sentence and only consider words in adjacent sentences as neighbors (e.g. if the words “a” and “b” are present in the current sentence but “b” is not in any adjacent sentence, “a” and “b” will not be considered neighbors).
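
To make the estimation step concrete, here is a minimal Python sketch of mutual information computed from raw counts. It assumes the usual pointwise form, log(P(w, v) / (P(w) P(v))), and normalizes word counts and pair counts by their respective totals. The function name, the toy counts and the normalization are my own illustration and are not taken from the benchmarked code.

```python
import math

def mutual_information(w, v, word_counts, pair_counts):
    """Pointwise mutual information log(P(w, v) / (P(w) * P(v))).

    Probabilities are maximum likelihood estimates: raw counts divided
    by the total number of words or pairs (an assumed normalization).
    """
    n_words = sum(word_counts.values())
    n_pairs = sum(pair_counts.values())
    p_w = word_counts[w] / n_words
    p_v = word_counts[v] / n_words
    p_joint = pair_counts.get((w, v), 0) / n_pairs
    if p_joint == 0:
        return float("-inf")  # the pair was never observed together
    return math.log(p_joint / (p_w * p_v))

# Hypothetical toy counts, for illustration only.
word_counts = {"car": 2, "auto": 2, "the": 4}
pair_counts = {("car", "auto"): 2, ("car", "the"): 1, ("auto", "the"): 1}

mi_car_auto = mutual_information("car", "auto", word_counts, pair_counts)
mi_car_the = mutual_information("car", "the", word_counts, pair_counts)
```

With these toy counts, "car" and "auto" score higher than "car" and "the", because "the" is frequent on its own and its co-occurrence with "car" is less surprising.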

An adept reader will notice that a key element of estimating mutual information is counting proximal word pairs in a language corpus. Conceptually, this is a simple problem: for each word $w$ in the corpus and for each word $v$ near $w$, produce the count $(w, v, 1)$ and then tally up the counts. In practice, however, the large size of the language corpus makes this a lengthy and memory-consuming operation. It is easy to see why: assuming a dictionary $V$ of possible words, in the worst case we will produce counts for each possible pair, a total of $|V|^2$ count pairs. Even for a modest dictionary of size 10000, this amounts to 100 million count pairs.
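
The quadratic worst case is easy to check on a toy vocabulary. The Python sketch below simply enumerates every ordered pair a counter might have to track. The helper name and the three-word vocabulary are illustrative assumptions.

```python
from itertools import product

def worst_case_pairs(vocabulary):
    """All ordered (w, v) pairs a collocation counter may need to track."""
    return list(product(vocabulary, repeat=2))

vocab = ["a", "b", "c"]            # hypothetical three-word dictionary
pairs = worst_case_pairs(vocab)    # 3 * 3 = 9 ordered pairs
```

Multiplying the dictionary size by ten multiplies the number of possible pairs by a hundred, which is why memory use quickly becomes the limiting factor.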

When implementing a collocation counter, it is therefore imperative to choose a programming language and a compiler that produce efficient code. While C and C++ (G++) are natural candidates, according to The Computer Language Benchmarks Game, Haskell (GHC) is a worthy contender, being on average only 2.5x slower than G++ on a wide set of benchmarks. This seems like a small price to pay for the niceties of the language.

However, the data from The Computer Language Benchmarks Game can be a bit misleading, as it only compares the fastest implementations in each language. As such, it provides only an upper bound on the performance that a user can expect from a compiler. While tremendously useful in many applications, it does not reflect the performance of a typical, idiomatic program in each language. This “typical performance” is what I attempted to measure in this article for the particular use case of counting collocations.

Implementation

The source code of my C++ and Haskell collocation counters is available on GitHub. Both implementations follow the same general algorithm:

    get_counts(sentence, context):
        count_list = []
        for w in words(sentence):
            for v in unique_words(context):
                count_list.append((w, v, 1))
        return count_list

    count_colocations(input):
        counts = new HashMap
        counts[w1 <- V, w2 <- V] = 0
        for paragraph in input:
            for sentence in paragraph:
                context = get_adjacent_sentences(sentence, paragraph)
                for (w, v, c) in get_counts(sentence, context):
                    counts[w, v] += c
        return sort_by_words(to_association_list(counts))
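
For readers who want to experiment, the pseudocode above can be sketched in runnable Python roughly as follows. This is not the benchmarked C++ or Haskell code. It assumes a document is a list of paragraphs, each a list of sentence strings, that words are whitespace-separated, and that "adjacent" means the sentence directly before and directly after the current one. It also passes a sentence index rather than the sentence itself to `get_adjacent_sentences`, and uses a `defaultdict` instead of pre-initializing every pair to zero.

```python
from collections import defaultdict

def words(sentence):
    """Split a sentence into words (assumes whitespace tokenization)."""
    return sentence.split()

def get_adjacent_sentences(index, paragraph):
    """Sentences directly before and after position `index` (assumed window of one)."""
    return paragraph[max(0, index - 1):index] + paragraph[index + 1:index + 2]

def get_counts(sentence, context):
    """Return one (w, v, 1) triple per word w and unique context word v."""
    unique_context = {v for s in context for v in words(s)}
    return [(w, v, 1) for w in words(sentence) for v in unique_context]

def count_collocations(document):
    """Tally the triples into a map and return them sorted by word pair."""
    counts = defaultdict(int)
    for paragraph in document:
        for i, sentence in enumerate(paragraph):
            context = get_adjacent_sentences(i, paragraph)
            for w, v, c in get_counts(sentence, context):
                counts[(w, v)] += c
    return sorted(counts.items())

# One paragraph with three sentences: "a b", "c", "a".
result = count_collocations([["a b", "c", "a"]])
for (w, v), c in result:
    print(w, v, c)
```

Note that the pair ("a", "b") never appears in the result: both words occur in the same sentence, but "b" is not in any adjacent sentence.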

The only thing of note is that get_counts returns a list of counts instead of directly accumulating the counts in the hash map, which is arguably non-idiomatic in the imperative C++. I made this particular choice to keep get_counts pure in Haskell, which would otherwise have to use Data.HashTable in the IO monad.
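
The trade-off can be illustrated with a small Python sketch that contrasts the two styles: a pure function that returns a list of triples, and an imperative one that mutates the map directly. Both function names are hypothetical and the code only mirrors the idea, not the actual C++ or Haskell sources.

```python
from collections import Counter

def get_counts_pure(sentence_words, context_words):
    """Pure variant: returns a fresh list of (w, v, 1) triples."""
    return [(w, v, 1) for w in sentence_words for v in sorted(set(context_words))]

def add_counts_in_place(counts, sentence_words, context_words):
    """Imperative variant: mutates `counts` directly, with no intermediate list."""
    for w in sentence_words:
        for v in set(context_words):
            counts[(w, v)] += 1

sentence, context = ["a", "b"], ["c", "c", "d"]

pure_total = Counter()
for w, v, c in get_counts_pure(sentence, context):
    pure_total[(w, v)] += c

in_place_total = Counter()
add_counts_in_place(in_place_total, sentence, context)
```

Both variants produce the same tallies. The pure one allocates an intermediate list for every sentence, which is the cost accepted here in exchange for keeping the function free of side effects.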

Both the C++ and Haskell implementations first read all their input from stdin into memory (although Haskell does this lazily) and then print the counts to stdout, one per line.

Results

I evaluated both implementations on an excerpt of plaintext Wikipedia articles generated with AutoCorpus. The full dataset is available in the GitHub repository as data/input.txt. The test system was a 2.4GHz quad-core Q6600 Linux box with 6GB of memory running 64-bit Ubuntu 11.10. The benchmarks were compiled with -O2 optimizations using G++ 4.6.1 and GHC 7.0.3. Unless noted otherwise, all presented data points have been averaged over 30 runs. For individual run results, see data/collocations-{cpp,hs}-times.csv in the git repository.

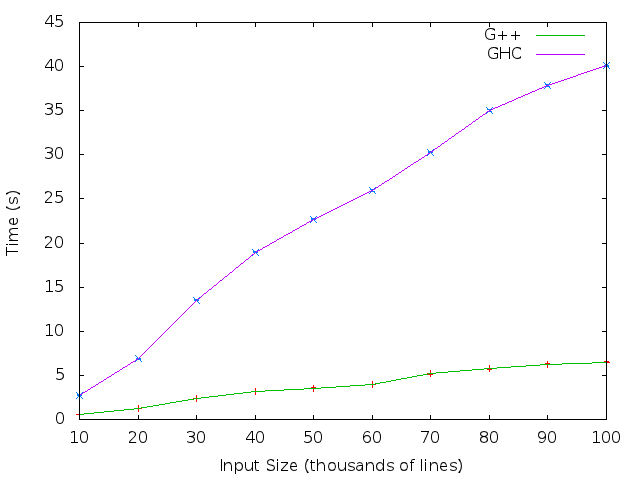

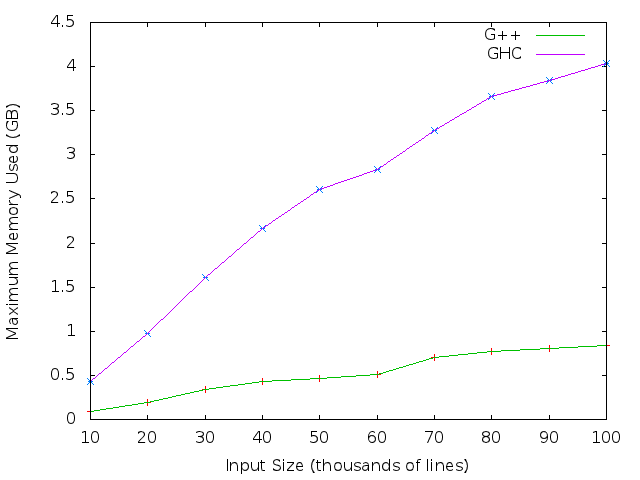

Figures 1 and 2 show the time and maximum memory used by the C++ and Haskell implementations as a function of input size in thousands of lines. Standard deviation was minimal and, while shown, cannot be discerned in the plots. Since both implementations ran essentially the same algorithm, the overall shape of the time and memory curves is identical as expected. On average, Haskell was 5.91x slower than C++ on the same input and used 4.95x more memory. This relationship held to within 0.9x regardless of the size of the input.

Figure 1: Time taken by the C++ (G++) and Haskell (GHC) implementations as a function of input size. On average Haskell was 5.91x slower than C++ for the same input.

Figure 2: Maximum memory used by the C++ (G++) and Haskell (GHC) implementations as a function of input size. On average Haskell used 4.95x more memory than C++ for the same input.

Tables 1 and 2 show the costliest calls (cumulative time) for the C++ and Haskell binaries for an input of 20 thousand lines. Please take these results with a grain of salt as they show only one run with compiler optimizations turned off. Due to space constraints, only the top functions are shown. For full information, the curious reader is welcome to look at the gprof and GHC profiler outputs. The visualized C++ call graph is also available here.

| function | cumulative time (%) |
|---|---|
| main | 100 |
| documentCounts | 98.7 |
| paragraphCounts | 54.9 |
| sentenceCounts | 38.7 |
| std::sort | 31.7 |
| words | 29.1 |

Table 1: Costliest calls (cumulative time) of the C++ collocation counter. For function details, see Colocations.cpp.

| function | cumulative time (%) |
|---|---|
| main | 100 |
| documentCounts | 48.7 |
| paragraphCounts | 28.1 |
| sentenceCounts | 27.9 |
| sentenceToSet | 22.5 |
| aggregateCounts | 19.5 |

Table 2: Costliest calls (cumulative time) of the Haskell collocation counter. For function details, see Colocations.hs.

The time profiles of the two programs are very similar. The difference between the times spent in documentCounts is due to the C++ implementation sorting inside that function, while the Haskell implementation sorts directly in main. Curiously, the profiler indicates that over 70% of the individual time of the Haskell program was spent in the GC module (see GHC profiler output), suggesting that garbage collection might be the main cause of Haskell’s slower performance.

Conclusions

A naive collocation counting algorithm in Haskell (GHC) was 5.91x slower and used 4.95x more memory than the same algorithm implemented in C++ (G++). The interpretation of these results is up to the reader. Personally, I think the slowdown is a reasonable trade-off for the productivity benefits of Haskell as a language, and I suspect it is still significantly faster than an equivalent algorithm implemented in a popular scripting language like Python, Perl or Ruby (although I have no data to back up that claim ;-)).

References

[1] M. Baroni, S. Bisi. Using cooccurrence statistics and the web to discover synonyms in a technical language. Proc. of the Fourth International Conference on Language Resources and Evaluation (LREC 2004). 2004.
