My previous post, Counting Collocations: GHC and G++ benchmarked, created a bit of a controversy in the Haskell subreddit. The most common complaint was that the C++ and Haskell programs used different data structures which made the comparison unfair. Many people offered advice on how the Haskell code could have been improved in this regard. The top suggestions were:

- replace

StringwithTextorByteString - replace

Data.HashTablewithData.HashMapfrom theunordered-containerspackage

The first item was suggested because String operations are known to be notoriously slow, and any text processing application should use Text or ByteString instead. These alternatives are also closer to the array-of-bytes string representation in C++ and would provide a more fair comparison. Likewise, Data.HashTable is not a top performer and faster implementations exist. While I think these are very valid suggestions, let me digress for a moment and explain why I chose String and Data.HashTable in the first place.

My goal in the original article was not to come up with the fastest possible program in neither Haskell or C++, but rather to implement the same algorithm in a straightforward way using standard library types and functions and see how well the respective compilers could optimize it. None of my original implementations were written with performance in mind, nor did I manually tune them. I used String in Haskell simply because that’s what string literals evaluate to. Likewise, I used Data.HashTable because it comes with GHC.

That said, to many Haskell developers the notion of standard types and functions extends to packages included in the Haskell Platform and/or easily accessible through cabal. Since this seems to be the prevailing view, it would be dishonest not to report results for a Haskell collocation counter that uses faster data structures. The rest of this article describes the new implementation and presents the new benchmark numbers.

Improved Collocation Counter

The improved Haskell implementation was contributed by Bas van Dijk. You can view the source here. Compared to the original version, the notable changes are:

Data.Textreplaces most uses ofStringData.HashMap.StrictreplacesData.HashTable- a two-level hash map replaces the original

- a faster version of

nubis used with performance instead of the defaultnub‘s - sentences are stored as words which avoids redundant splitting inside

sentenceCounts

The first two optimizations merely swapped data structures for faster implementations. The last three changed the overall algorithm and I had modify the C++ code accordingly in order to maintain a fair comparison. You can view the updated C++ code here.

Benchmark results follow.

Results

The hardware and software setup was the same as in the earlier benchmark, i.e. a 2.4GHz quad-core Q6600 Linux box with 6GB of memory running 64-bit Ubuntu 11.10. The benchmarks were compiled with -O2 optimizations using G++ 4.6.1 and GHC 7.0.3. Unless noted otherwise, all presented data points have been averaged over 30 runs. For individual run results, see data/collocations-{cpp,hs}-times.csv in the git repository.

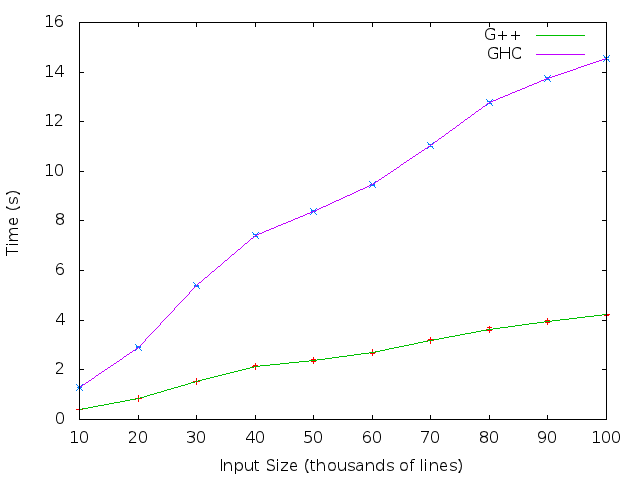

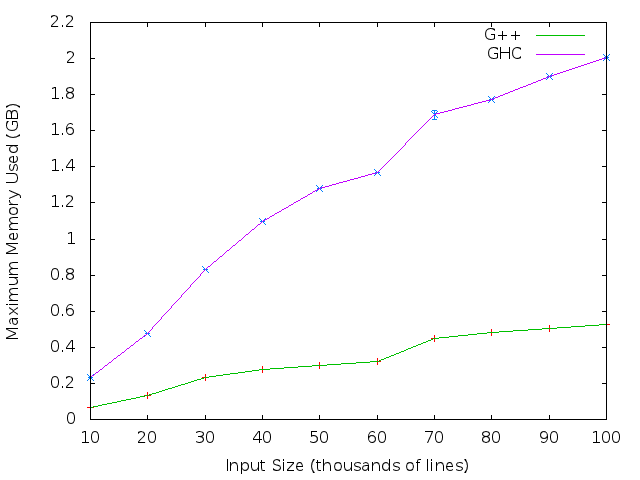

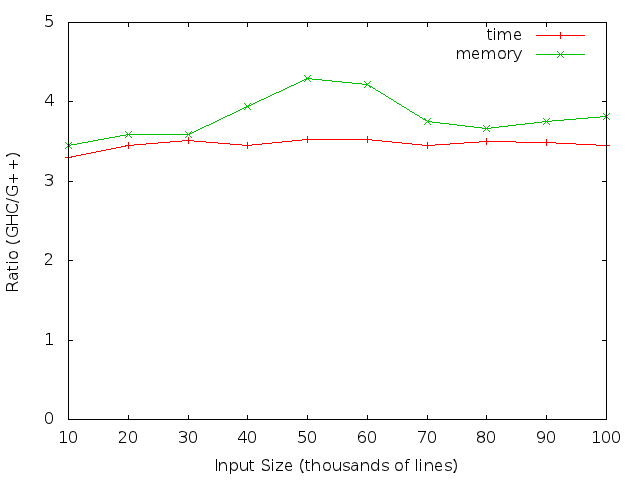

Figures 1 and 2 show the time and maximum memory used by the C++ and Haskell implementations as a function of input size in thousands of lines. Figure 3 shows the ratio of time and memory used by Haskell to the time and memory used by C++. On average Haskell was 3.47x slower and used 3.81x more memory than C++ on the same input. These relationships held regardless of input size, with a small deviation in the ratio of used memory between and .

Figure 1: Time taken by the C++ (G++) and Haskell (GHC) implementations as a function of input size. On average Haskell was 3.47x slower than C++ on the same input.

Figure 2: Maximum memory used by the C++ (G++) and Haskell (GHC) implementations as a function of input size. On average Haskell used 3.81x more memory than C++ on the same input.

Figure 3: Time and maximum memory used by the Haskell implementation relative to time and memory used by the C++ implementation. On average Haskell was 3.47x slower and used 3.81x more memory than C++ on the same input.

Tables 1 and 2 show the costliest calls (cumulative time) for the C++ and Haskell binaries for an input of 20 thousand lines. Please take these results with a grain of salt as they show only one run with compiler optimizations turned off. Due to space constraints, only the top functions are shown. For full information, the curious reader is welcome to look at the gprof and GHC profiler outputs.

| function | cumulative time (%) |

|---|---|

main

| 99.5 |

documentCounts

| 98.5 |

std::sort

| 42.1 |

paragraphCounts

| 42.0 |

sentenceCounts

| 13.5 |

words

| 4.0 |

nub

| 1.3 |

Table 1: Costliest calls (cumulative time) of the C++ collocation counter. For function details, see Colocations.cpp.

| function | cumulative time (%) |

|---|---|

main

| 73.5 |

serialize

| 31.6 |

countTable

| 19.1 |

counts

| 7.4 |

pairs

| 5.6 |

sentenceCounts

| 5.1 |

fastNub

| 4.2 |

convert

| 0.9 |

Table 2: Costliest calls (cumulative time) of the Haskell collocation counter. For function details, see Colocations.hs.

Conclusions

Bas van Dijk’s improvements to the Haskell collocation counter made its performance closer to the performance of the same algorithm implemented in C++. On average Haskell was 3.47x slower and used 3.81x more memory than C++ on the same input, which is a significant improvement from the previous 5.91x and 4.95x. Since I considered the old numbers to be pretty pretty good by themselves, I think this is a great result. A 3.47x slowdown is a small price to pay for the simplicity and convenience of Haskell as a language, and is a testament to how well GHC can optimize Haskell programs.

5 responses

Do you want to comment?

Comments RSS and TrackBack Identifier URI ?

Trackbacks